Introducing video filters in Qt Multimedia

March 20, 2015 by Laszlo Agocs

Qt Multimedia makes it very easy to get a video stream from the camera or a video file rendered as part of your application's Qt Quick scene. What is more, its modular backend and video node plugin system make it possible to provide hardware-accelerated, zero-copy solutions on platforms where such an option is available. All this is hidden from applications that use Qt Quick elements like Camera, MediaPlayer and VideoOutput, which is great. But what if you want to do some additional filtering or computation on the video frames before they are presented? For example, you may want to transform the frame, or compute something from it, on the GPU using OpenCL or CUDA. Or you may want to run some algorithms provided by OpenCV. Before Qt 5.5 there was no easy way to do this in combination with the familiar Qt Quick elements. With Qt 5.5 this is going to change: say hello to QAbstractVideoFilter.

QAbstractVideoFilter serves as a base class for classes that are exposed to the QML world and instantiated from there. Each instance is then associated with a VideoOutput element. From that point on, every video frame the VideoOutput receives is run through the filter first. The filter can provide a new video frame to be used in place of the original, calculate some results, or both. The results of the computation are exposed to QML as arbitrary data structures and can be used from JavaScript. For example, an OpenCV-based object detection algorithm can generate a list of rectangles that is exposed to QML. The corresponding JavaScript code can then position Rectangle elements at the indicated locations.
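Positioning those Rectangle elements correctly means mapping each rectangle from video frame pixels to item coordinates. The arithmetic below is a minimal sketch, assuming the frame is scaled uniformly and centered inside the item (as with VideoOutput's default PreserveAspectFit fill mode) and ignoring orientation; the RectF and SizeF types and the mapFrameRectToItem() helper are hypothetical. In a real application, VideoOutput's own mapRectToItem() function is the more robust choice.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical minimal geometry types (Qt code would use QRectF and QSizeF).
struct RectF { double x, y, w, h; };
struct SizeF { double w, h; };

// Maps a rectangle given in video frame pixel coordinates to item coordinates,
// assuming the frame is scaled uniformly to fit inside the item and centered,
// leaving letterbox or pillarbox bars on the remaining sides.
RectF mapFrameRectToItem(const RectF &r, SizeF frame, SizeF item)
{
    // Largest uniform scale that still fits the whole frame inside the item.
    const double scale = std::min(item.w / frame.w, item.h / frame.h);
    // Offsets that center the scaled frame inside the item.
    const double offsetX = (item.w - frame.w * scale) / 2.0;
    const double offsetY = (item.h - frame.h * scale) / 2.0;
    return { offsetX + r.x * scale, offsetY + r.y * scale, r.w * scale, r.h * scale };
}
```

For a 640x480 frame shown in an 800x800 item, the scale is 1.25 and the picture is letterboxed by 100 pixels at the top and bottom, so every detected rectangle moves down by 100 pixels in addition to being scaled.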

Let's see some code

```qml
import QtQuick 2.3
import QtMultimedia 5.5
import my.cool.stuff 1.0

Item {
    Camera {
        id: camera
    }

    VideoOutput {
        source: camera
        anchors.fill: parent
        filters: [ faceRecognitionFilter ]
    }

    FaceRecognizer {
        id: faceRecognitionFilter
        property real scaleFactor: 1.1 // Define properties either in QML or in C++. Can be animated too.
        onFinished: {
            console.log("Found " + result.rects.length + " faces");
            // ... do something with the rectangle list
        }
    }
}
```

The new filters property of VideoOutput makes it possible to associate one or more QAbstractVideoFilter instances with it. These are then invoked in order for every incoming video frame.
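The ordering rule can be modeled in a few lines of plain C++. In this sketch the Frame and Filter types and runFilterChain() are hypothetical stand-ins, not Qt API: each filter receives the output of the previous one, and whatever the last filter returns is what gets displayed.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <vector>

// Hypothetical stand-in for a video frame: an 8-bit grayscale image in system memory.
struct Frame {
    int width = 0, height = 0;
    std::vector<std::uint8_t> pixels;  // width * height bytes, row-major
};

// A filter takes the current frame and returns the frame to pass on:
// either the input unchanged or a newly produced one.
using Filter = std::function<Frame(const Frame &)>;

// Invokes every filter in list order; each one sees the previous filter's output.
Frame runFilterChain(const Frame &input, const std::vector<Filter> &filters)
{
    Frame current = input;
    for (const Filter &filter : filters)
        current = filter(current);
    return current;  // the frame that would be presented
}
```

Swapping two filters generally changes the result, so the order of the filters list matters. In the real API, the runnable belonging to the last filter in the list is told so through the QVideoFilterRunnable::LastInChain run flag.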

The outline of the C++ implementation looks like this:

```cpp
#include <QAbstractVideoFilter>
#include <QVideoSurfaceFormat>
#include <QtQml>

QVideoFilterRunnable *FaceRecogFilter::createFilterRunnable()
{
    return new FaceRecogFilterRunnable(this);
}

// ...

QVideoFrame FaceRecogFilterRunnable::run(QVideoFrame *input, const QVideoSurfaceFormat &surfaceFormat, RunFlags flags)
{
    // Convert the input into a suitable OpenCV image format, then run e.g. cv::CascadeClassifier,
    // and finally store the list of rectangles into a QObject (result) exposing a 'rects' property.
    // ...

    // m_filter is the FaceRecogFilter instance that was passed in by createFilterRunnable().
    emit m_filter->finished(result);

    return *input; // pass the input frame through unchanged
}

// ...

int main(int argc, char *argv[])
{
    // ...
    qmlRegisterType<FaceRecogFilter>("my.cool.stuff", 1, 0, "FaceRecognizer");
    // ...
}
```

Here our filter implementation simply passes the input video frame through while generating a list of rectangles, which can then be examined from QML in the onFinished signal handler. Simple and flexible.

While the registration of our custom filter happens in the main() function in the example, the filter can also be provided from QML extension plugins, independently of the application.
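The pass-through pattern described above can be sketched without Qt or OpenCV. Everything in this block is hypothetical: a simple brightness threshold stands in for cv::CascadeClassifier, and a std::function callback plays the role of the finished signal. The point is the shape of run(): it computes rectangles, hands them over, and returns the input frame untouched.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical grayscale frame in system memory.
struct Frame {
    int width = 0, height = 0;
    std::vector<std::uint8_t> pixels;  // width * height bytes, row-major
};

struct Rect { int x, y, w, h; };

// Hypothetical pass-through filter: it never modifies the frame, it only
// computes a list of rectangles and passes it to a callback, which here
// plays the role of the finished(result) signal used from QML.
class BrightRegionFilter {
public:
    using FinishedCallback = std::function<void(const std::vector<Rect> &)>;

    BrightRegionFilter(std::uint8_t threshold, FinishedCallback onFinished)
        : m_threshold(threshold), m_onFinished(std::move(onFinished)) {}

    Frame run(const Frame &input)
    {
        // Stand-in detector: bounding box of all pixels brighter than the threshold.
        int minX = input.width, minY = input.height, maxX = -1, maxY = -1;
        for (int y = 0; y < input.height; ++y) {
            for (int x = 0; x < input.width; ++x) {
                if (input.pixels[y * input.width + x] > m_threshold) {
                    minX = std::min(minX, x);
                    minY = std::min(minY, y);
                    maxX = std::max(maxX, x);
                    maxY = std::max(maxY, y);
                }
            }
        }
        std::vector<Rect> rects;
        if (maxX >= 0)  // at least one bright pixel was found
            rects.push_back({minX, minY, maxX - minX + 1, maxY - minY + 1});
        m_onFinished(rects);  // report the results
        return input;         // pass the frame through unchanged
    }

private:
    std::uint8_t m_threshold;
    FinishedCallback m_onFinished;
};
```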

The split between QAbstractVideoFilter and QVideoFilterRunnable mirrors the one between QQuickItem and QSGNode. This is essential in order to support threaded rendering: when the Qt Quick scene graph is using its threaded render loop, all rendering (the OpenGL operations) happens on a dedicated thread. This includes the filtering operations. Therefore we have to ensure that the graphics and compute resources live on, and are only accessed from, the render thread. A QVideoFilterRunnable always lives on the render thread, and all its functions are guaranteed to be invoked on that thread with the Qt Quick scene graph's OpenGL context bound. This makes creating filters that rely on GPU compute APIs easy and painless, even when OpenGL interop is involved.
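The threading contract can be illustrated with standard C++ threads. In this sketch, which is a simplified model rather than Qt's implementation, a std::thread with a job queue stands in for the scene graph's render thread, FilterFrontEnd stands in for QAbstractVideoFilter, and Runnable stands in for QVideoFilterRunnable; all three names are hypothetical. The runnable is created, used and destroyed only by jobs running on the render thread, and run() asserts that it is never called from anywhere else.

```cpp
#include <cassert>
#include <condition_variable>
#include <functional>
#include <memory>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>

// Hypothetical runnable: remembers the thread that created it and insists
// that all work happens there, like a QVideoFilterRunnable on the render thread.
class Runnable {
public:
    Runnable() : m_owner(std::this_thread::get_id()) {}
    int run(int frame)
    {
        assert(std::this_thread::get_id() == m_owner);  // never called from another thread
        return frame + 1;  // placeholder for real (e.g. GPU) work
    }
    std::thread::id owner() const { return m_owner; }

private:
    std::thread::id m_owner;
};

// Hypothetical front end living on the GUI thread, like QAbstractVideoFilter:
// it owns no per-frame resources, it only knows how to create a runnable.
struct FilterFrontEnd {
    std::unique_ptr<Runnable> createRunnable() const { return std::make_unique<Runnable>(); }
};

// Minimal "render thread": executes posted jobs in order on one dedicated thread.
class RenderThread {
public:
    RenderThread() : m_thread([this] { loop(); }) {}
    ~RenderThread()
    {
        post(nullptr);  // an empty job is the stop request
        m_thread.join(); // all previously posted jobs have run by now
    }
    void post(std::function<void()> job)
    {
        {
            std::lock_guard<std::mutex> lock(m_mutex);
            m_jobs.push(std::move(job));
        }
        m_cv.notify_one();
    }
    std::thread::id id() const { return m_thread.get_id(); }

private:
    void loop()
    {
        for (;;) {
            std::function<void()> job;
            {
                std::unique_lock<std::mutex> lock(m_mutex);
                m_cv.wait(lock, [this] { return !m_jobs.empty(); });
                job = std::move(m_jobs.front());
                m_jobs.pop();
            }
            if (!job)
                return;
            job();
        }
    }

    std::mutex m_mutex;
    std::condition_variable m_cv;
    std::queue<std::function<void()>> m_jobs;
    std::thread m_thread;  // declared last: the members above exist before loop() starts
};
```

A caller posts one job that creates the runnable through the front end, further jobs that call run() for each frame, and a final job that destroys the runnable, all on the same RenderThread.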

GPU compute and OpenGL interop

All this is very powerful when it comes to avoiding copies of the pixel data and utilizing the GPU as much as possible. The output video frame can be in any supported format and can differ from the input frame. For instance, a GPU-accelerated filter can upload the image data received from the camera into an OpenGL texture, perform operations on it (using OpenCL-OpenGL interop, for example) and provide the resulting OpenGL texture as its output. This means that after the initial texture upload, which happens anyway even when no QAbstractVideoFilter is used at all, everything happens on the GPU. When doing video playback, the situation is even better on some platforms: if the input is already an OpenGL texture, D3D texture, EGLImage or similar, we can potentially perform everything on the GPU without any readbacks or copies.
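The copy argument can be made concrete with a small bookkeeping model. This is a hypothetical simplification, not a Qt API: each stage declares where it needs its input and where it leaves its output, and the final presentation is assumed to require a GPU texture. Counting the resulting uploads and readbacks shows why a GPU filter adds no transfers beyond the upload that plain camera display already needs.

```cpp
#include <cassert>
#include <vector>

// Hypothetical, simplified model of where a frame's pixel data lives.
enum class Location { SystemMemory, Gpu };

// A pipeline stage (e.g. one filter) states where it needs its input
// and where it leaves its output.
struct Stage {
    Location input;
    Location output;
};

struct Transfers {
    int uploads = 0;    // system memory -> GPU (e.g. texture upload)
    int readbacks = 0;  // GPU -> system memory
};

// Counts the transfers needed to take a frame from its source through all
// stages and on to presentation, which is assumed to render from a GPU texture.
Transfers countTransfers(Location source, const std::vector<Stage> &stages)
{
    Transfers t;
    Location current = source;
    auto moveTo = [&](Location wanted) {
        if (current == wanted)
            return;  // data is already where it is needed: no copy
        if (wanted == Location::Gpu)
            ++t.uploads;
        else
            ++t.readbacks;
        current = wanted;
    };
    for (const Stage &s : stages) {
        moveTo(s.input);   // transfer needed before the stage can run
        moveTo(s.output);  // transfer performed by the stage itself, e.g. its own upload
    }
    moveTo(Location::Gpu);  // presentation needs a texture
    return t;
}
```

With this model, a camera frame in system memory costs one upload with or without a GPU filter, a GPU-resident video frame processed on the GPU costs nothing, and a CPU filter applied to GPU-resident input costs a readback plus a re-upload.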

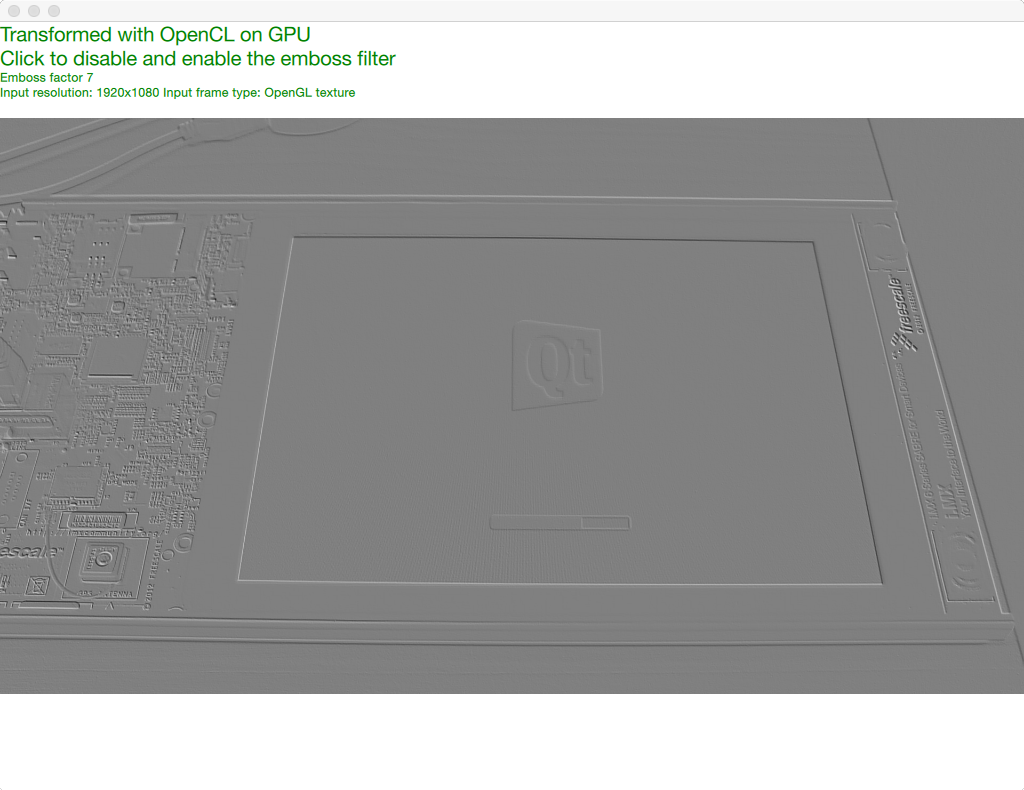

The OpenCL-based example that comes with Qt Multimedia demonstrates this well. In the case shown here, running on OS X, the input frames from the video already contain OpenGL textures. All we need to do is use OpenCL's GL interop to get a CL image object. The output image object is also based on a GL texture, allowing us to pass it to VideoOutput and the Qt Quick scene graph as-is.

Real-time image transformation on the GPU with OpenCL, running on OS X


While the emboss effect is not particularly interesting in itself and could also be achieved with OpenGL shaders using ShaderEffect items, the example proves that integrating OpenCL and similar APIs with Qt Multimedia does not have to be hard; in fact, the code is surprisingly simple, yet powerful.
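For reference, the emboss effect itself is just a 3x3 convolution. The CPU version below is a sketch for grayscale images using one common emboss kernel; it is not the OpenCL kernel from the Qt example, whose coefficients may differ. Because the kernel weights sum to 1, flat areas keep their brightness while edges become lighter or darker depending on their direction.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Applies a common 3x3 emboss kernel to an 8-bit grayscale image (row-major,
// width * height bytes). Neighbors outside the image are clamped to the
// nearest edge pixel, and results are clamped to the 0..255 range.
std::vector<std::uint8_t> emboss(const std::vector<std::uint8_t> &src, int width, int height)
{
    static const int kernel[3][3] = {
        { -2, -1, 0 },
        { -1,  1, 1 },
        {  0,  1, 2 },
    };
    std::vector<std::uint8_t> dst(src.size());
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            int sum = 0;
            for (int ky = -1; ky <= 1; ++ky) {
                for (int kx = -1; kx <= 1; ++kx) {
                    const int sx = std::clamp(x + kx, 0, width - 1);
                    const int sy = std::clamp(y + ky, 0, height - 1);
                    sum += kernel[ky + 1][kx + 1] * src[sy * width + sx];
                }
            }
            dst[y * width + x] = static_cast<std::uint8_t>(std::clamp(sum, 0, 255));
        }
    }
    return dst;
}
```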

It is worth pointing out that filters that do not modify the image and do not need to stay in sync with the displayed frames do not have to block until the computation is finished: the implementation of run() can queue the necessary operations without waiting for them to complete. A signal indicating the availability of the results is then emitted later, for example from the associated event callback in the case of OpenCL. All this is made possible by the thread awareness of Qt's signals: emitting the signal works equally well regardless of which thread the callback is invoked on.
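The non-blocking pattern can be sketched with standard C++ threads. The names here are hypothetical: AsyncBrightCounter::run() returns the frame immediately and finishes its computation (counting bright pixels) on a worker thread, and Mailbox stands in for the queued signal delivery that Qt performs automatically when a signal is emitted from another thread. Starting one thread per frame keeps the sketch short; a real filter would instead enqueue work on a compute queue, for example an OpenCL command queue, and emit its result from the completion callback.

```cpp
#include <algorithm>
#include <cassert>
#include <condition_variable>
#include <cstdint>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>
#include <vector>

// Thread-safe mailbox: any thread may post, the consumer takes values in order.
// It models a queued signal connection delivering results to the GUI thread.
template <typename T>
class Mailbox {
public:
    void post(T value)
    {
        {
            std::lock_guard<std::mutex> lock(m_mutex);
            m_items.push(std::move(value));
        }
        m_cv.notify_one();
    }
    T take()  // blocks until a value is available
    {
        std::unique_lock<std::mutex> lock(m_mutex);
        m_cv.wait(lock, [this] { return !m_items.empty(); });
        T value = std::move(m_items.front());
        m_items.pop();
        return value;
    }

private:
    std::mutex m_mutex;
    std::condition_variable m_cv;
    std::queue<T> m_items;
};

// Hypothetical filter whose run() never waits for its own computation.
class AsyncBrightCounter {
public:
    explicit AsyncBrightCounter(Mailbox<int> &results) : m_results(results) {}
    ~AsyncBrightCounter()
    {
        for (std::thread &t : m_workers)
            t.join();  // do not let background work outlive the filter
    }

    std::vector<std::uint8_t> run(const std::vector<std::uint8_t> &frame, std::uint8_t threshold)
    {
        // Copy what the background work needs; the frame may be reused after run() returns.
        m_workers.emplace_back([this, pixels = frame, threshold] {
            const auto count = std::count_if(pixels.begin(), pixels.end(),
                                             [threshold](std::uint8_t p) { return p > threshold; });
            m_results.post(static_cast<int>(count));  // "emit" from the worker thread
        });
        return frame;  // hand the frame on immediately, without waiting for the count
    }

private:
    Mailbox<int> &m_results;
    std::vector<std::thread> m_workers;
};
```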

CPU-based filtering

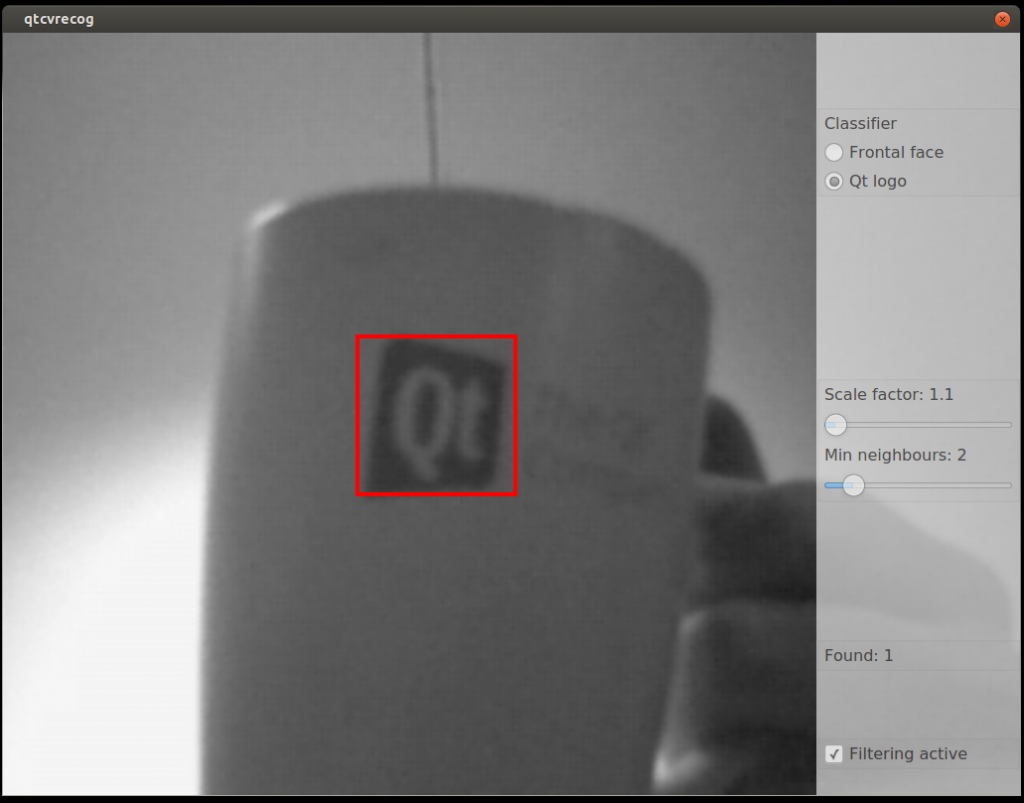

Not all uses of video filters will rely on the GPU. Today's PCs and even many embedded devices are powerful enough to run many algorithms on the CPU. Here is a screenshot from the finished version of the code snippet above. Instead of faces, we recognize something more exciting, namely Qt logos:

Qt logo recognition with OpenCV and a webcam in a Qt Quick application using Qt Multimedia and Qt Quick Controls.


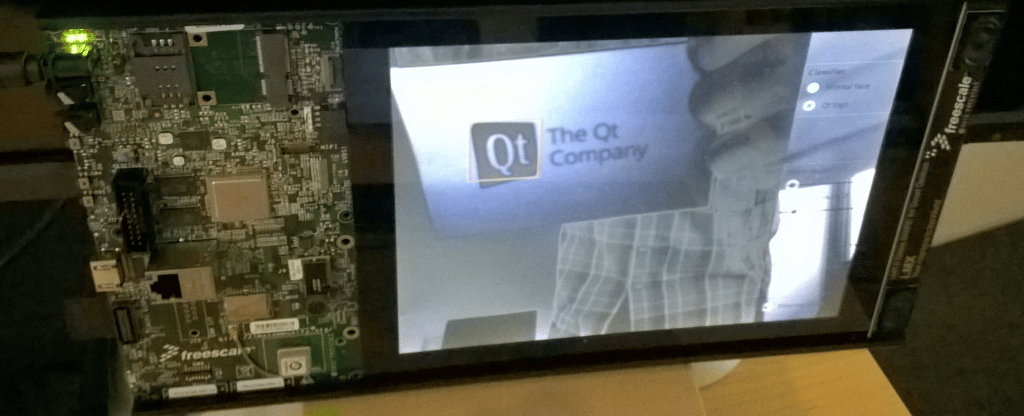

The application's user interface is fully QML-based; even the rectangles are actual Rectangle elements. It is shown here running on a desktop Linux system, where the video frames from the camera are provided as YUV image data in system memory. However, it works just as well on Embedded Linux devices supported by Qt Multimedia, for example the i.MX6-based Sabre SD board. Here comes the proof:

The same application, using the on-board MIPI camera


And it just works, with the added bonus of the touch-friendly controls from the Flat style.
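On the desktop Linux system, the camera frames arrive as YUV data in system memory, which suits CPU-based detection well: in planar and semi-planar YUV 4:2:0 layouts the first plane is a full-resolution luma image, that is, already grayscale, which is the kind of input OpenCV's cascade classifiers typically work on. The extractLuma() helper below is a hypothetical sketch of extracting that plane while honoring the row stride. In a Qt filter, the pointer and stride would presumably come from the mapped QVideoFrame (bits() and bytesPerLine()), and the actual pixel format should be checked first, since packed formats such as YUYV interleave luma and chroma and need different handling.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Copies the full-resolution luma (Y) plane of a planar or semi-planar
// YUV 4:2:0 frame (for example YUV420P or NV12) into a tightly packed
// 8-bit grayscale buffer. The Y plane comes first in both layouts.
// 'bytesPerLine' is the Y plane's row stride, which can be larger than
// 'width' when the rows are padded.
std::vector<std::uint8_t> extractLuma(const std::uint8_t *data, int width, int height, int bytesPerLine)
{
    const std::size_t w = static_cast<std::size_t>(width);
    std::vector<std::uint8_t> gray(w * static_cast<std::size_t>(height));
    for (int y = 0; y < height; ++y) {
        // Copy one visible row, skipping any padding at the end of the source row.
        std::memcpy(gray.data() + static_cast<std::size_t>(y) * w,
                    data + static_cast<std::size_t>(y) * static_cast<std::size_t>(bytesPerLine),
                    w);
    }
    return gray;
}
```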
