Using Docker to test Qt for WebAssembly

March 05, 2019 by Maurice Kalinowski

There has been a lot of excitement around WebAssembly, and more specifically Qt for WebAssembly, recently. Unfortunately, there are no snapshots available yet. Even once there are, you will still need to install a couple of prerequisites locally to set up your development environment.

I wanted to try it out, and the purpose of this post is to create a development environment for testing a project against the current state of this port. This is where Docker comes in.

Historically, Docker has been used to run web apps in the cloud, where containers scale easily, provide implicit sandboxing, and are lightweight. Well, at least more lightweight than a whole virtual machine.

These days, usage covers many more cases:

- Build Environment (standalone)

- Development Environment (to create SDKs for other users)

- Continuous Integration (run tests inside a container)

- Embedded runtime

Containers on embedded devices are not part of this post, but the highlights of that approach are:

- Usage for application development

- Resources can be controlled

- Again, sandboxing / security related items

- Cloud features like App Deployment management, OTA, etc…

We will probably get more into this in a later post. In the meantime, you can also check what our partners at Toradex are up to with their Torizon project. Also, Burkard wrote an interesting article about using Docker in conjunction with OpenEmbedded.

Let’s get back to Qt for WebAssembly and how to tackle the goal. The assumption is that we have a working project, written with Qt, for another platform.

The target is to create a container capable of compiling that project against Qt for WebAssembly. Next, we want to test the application in the browser.

1. Creating a dev environment

Morten has written a superb introduction on how to compile Qt for WebAssembly. In the long term, we will create binaries for you to download and use via the Qt Installer. But for now, we aim to have something minimal, where we do not need to take care of setting up dependencies. Another advantage of using Docker Hub is that the resulting image can be shared with anyone.

The first part of the Dockerfile looks like this:

FROM trzeci/emscripten AS qtbuilder

RUN mkdir -p /development

WORKDIR /development

RUN git clone --branch=5.13 git://code.qt.io/qt/qt5.git

WORKDIR /development/qt5

RUN ./init-repository

RUN mkdir -p /development/qt5_build

WORKDIR /development/qt5_build

RUN /development/qt5/configure -xplatform wasm-emscripten -nomake examples -nomake tests -opensource --confirm-license

RUN make -j `grep -c '^processor' /proc/cpuinfo`

RUN make install

Browsing through Docker Hub shows a lot of potential starting points. In this case, I’ve selected a base image which has Emscripten installed and can be used directly in the follow-up steps.

The next steps are generally a one-to-one copy of the build instructions.

At this point, we have one huge image containing all build artifacts (object files, generated mocs, …). It is too big to share, and those artifacts are not needed from here on. Some people use volume sharing for this: the build happens in a directory mounted from the host system into the container, and the install step copies the results into the image. Personally, I prefer not to clutter my host system for this part.

Newer versions of Docker (17.05 and later) support multi-stage builds, which allow you to create a new image and copy content from a previous stage into it. To achieve this, the rest of the Dockerfile looks like this:

FROM trzeci/emscripten AS userbuild

COPY --from=qtbuilder /usr/local/Qt-5.13.0/ /usr/local/Qt-5.13.0/

ENV PATH="/usr/local/Qt-5.13.0/bin:${PATH}"

WORKDIR /project/build

CMD qmake /project/source && make

Again, we use the same base image to have em++ and friends available, and copy the installed Qt build into the new image. Next, we add it to the PATH and change the working directory. The location will be important later. CMD specifies the default command that is executed when the container is launched without an explicit command.
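As a rough sketch of how the two-stage Dockerfile above could be turned into an image: `docker build` takes `--target` to select the stage to build and `-t` to name the result. The function name `build_qtwasm_image`, the default tag `qtwasm_builder:latest`, and the `DOCKER` override for previewing the command are assumptions made for illustration, not part of the original setup.

```shell
#!/bin/sh
# Hypothetical helper: builds the final "userbuild" stage of the Dockerfile.
# The default tag and build context are assumptions; adjust them as needed.
build_qtwasm_image() {
    tag="${1:-qtwasm_builder:latest}"
    context="${2:-.}"
    # Set DOCKER=echo to print the arguments instead of running the build.
    ${DOCKER:-docker} build --target userbuild -t "$tag" "$context"
}
```

Calling `build_qtwasm_image` in the directory that contains the Dockerfile compiles Qt in the first stage, so expect a long first run. `DOCKER=echo build_qtwasm_image` only prints the arguments that would be passed to Docker.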

2. Using the dev environment / Compile your app

The image to use for testing an application is now created. To test the build of a project, create a build directory and invoke Docker as follows:

docker run --rm -v <project_source>:/project/source -v <build_directory>:/project/build maukalinow/qtwasm_builder:latest

This will launch the container, call qmake and make, and leave the build artifacts in your build directory. Inside the container, /project/build is the build directory, which is the reason for setting the working directory above.

To reduce typing this each time, I created a minimal batch script for myself (yes, I am a Windows person :) ). You can find it here.
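For readers on a POSIX shell, a comparable wrapper might look like the sketch below. It is not the batch script mentioned above. The function name `qtwasm_build` and the `DOCKER` override are hypothetical, while the mount points and image name are taken from the command shown earlier. The paths are resolved to absolute ones, which is the safe form for bind mounts.

```shell
#!/bin/sh
# Hypothetical wrapper around the "docker run" call shown above.
# Usage: qtwasm_build <project_source> <build_directory>
qtwasm_build() {
    if [ "$#" -ne 2 ]; then
        echo "usage: qtwasm_build <project_source> <build_directory>" >&2
        return 2
    fi
    # Create the build directory first, then resolve both paths to
    # absolute ones before handing them to Docker.
    mkdir -p "$2" || return 1
    src=$(cd "$1" && pwd) || return 1
    build=$(cd "$2" && pwd) || return 1
    # Set DOCKER=echo to print the arguments instead of starting a container.
    ${DOCKER:-docker} run --rm \
        -v "$src:/project/source" \
        -v "$build:/project/build" \
        maukalinow/qtwasm_builder:latest
}
```

A call such as `qtwasm_build ./myapp ./myapp-build` (both paths are placeholders) then runs qmake and make inside the container and leaves the results in the build directory.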

3. Test the app / Containers again

Hopefully, you have been able to compile your project, potentially needing some adjustments, and now it is time to verify correct behavior on runtime in the browser. What we need is a browser run-time to serve the content created. Well, again docker can be of help here. With no specific preference, mostly just checking the first hit on the hub, you can invoke a runtime by calling

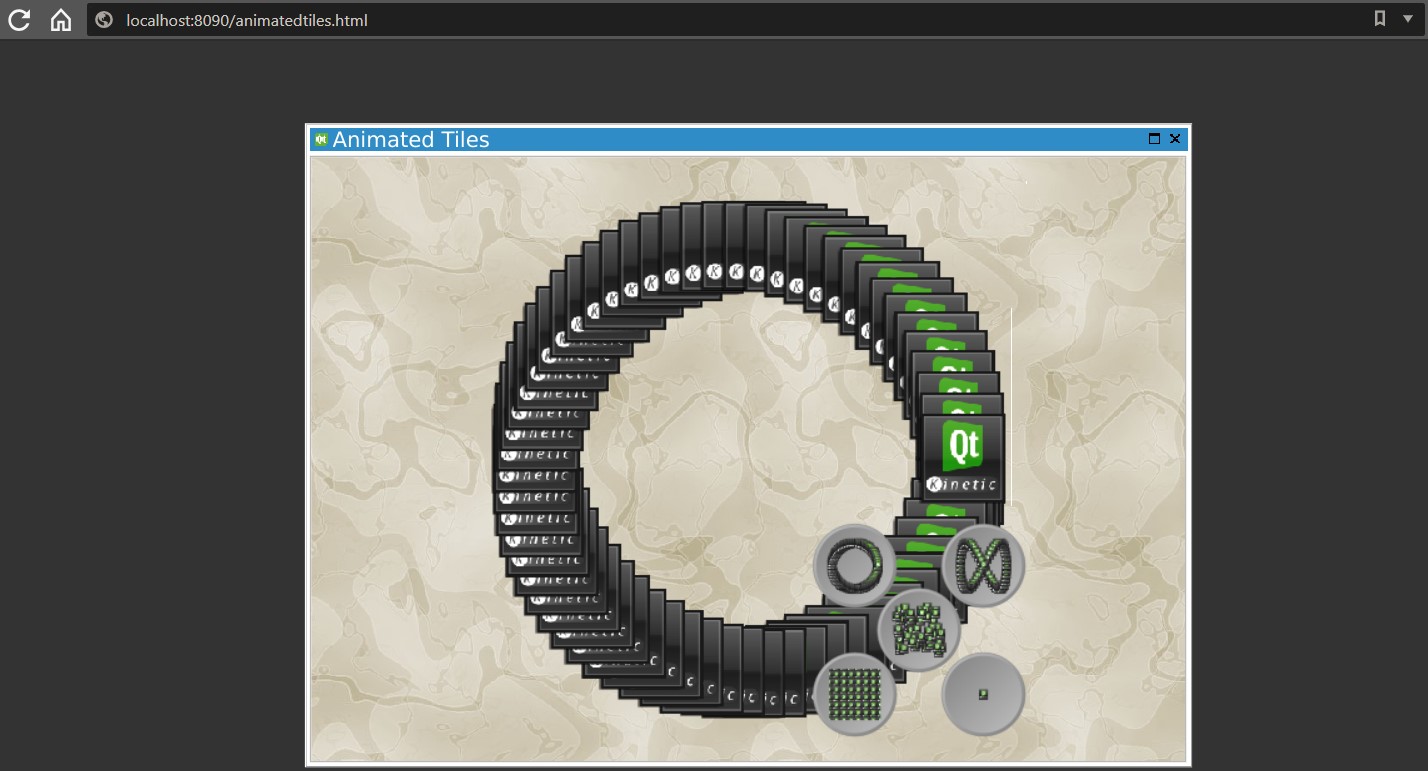

docker run --rm -p 8090:8080 -v <project_dir>:/app/public:ro netresearch/node-webserverThis will create a webserver, which you can browse to from your local browser. Here’s a screenshot of the animated tiles example
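The serving step can be wrapped in the same way. In this sketch, the function name `qtwasm_serve`, the optional host port argument, and the `DOCKER` override are assumptions. The container port 8080, the `/app/public` mount point, and the image name come from the command above.

```shell
#!/bin/sh
# Hypothetical helper: serves a directory read-only through the web server image.
# Usage: qtwasm_serve [project_dir] [host_port]
qtwasm_serve() {
    dir=$(cd "${1:-.}" && pwd) || return 1
    port="${2:-8090}"
    echo "Serving $dir at http://localhost:$port/" >&2
    # Set DOCKER=echo to print the arguments instead of starting a container.
    ${DOCKER:-docker} run --rm \
        -p "$port:8080" \
        -v "$dir:/app/public:ro" \
        netresearch/node-webserver
}
```

Running `qtwasm_serve <build_directory>` and opening the generated HTML page under `http://localhost:8090/` should then load the application. The exact page name depends on your project.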

Also, are you aware that Qt Http Server was introduced recently? It might be an interesting idea to encapsulate it in a container and check whether additional files can be removed to minimize the image size. For more information, check our posts here.

If you want to try out the image itself, you can find and pull it from here.

I’d like to close this post by asking you, the readers, for some feedback and getting a discussion going.

- Are you using containers in conjunction with Qt already?

- What are your use-cases?

- Did you try out Qt in conjunction with containers already? What’s your experience?

- Would you expect Qt to provide “something” out-of-the-box? What would that be?
